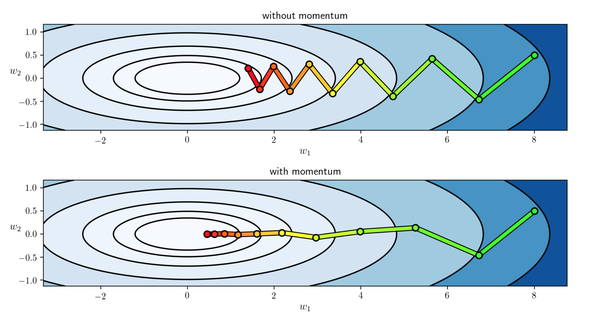

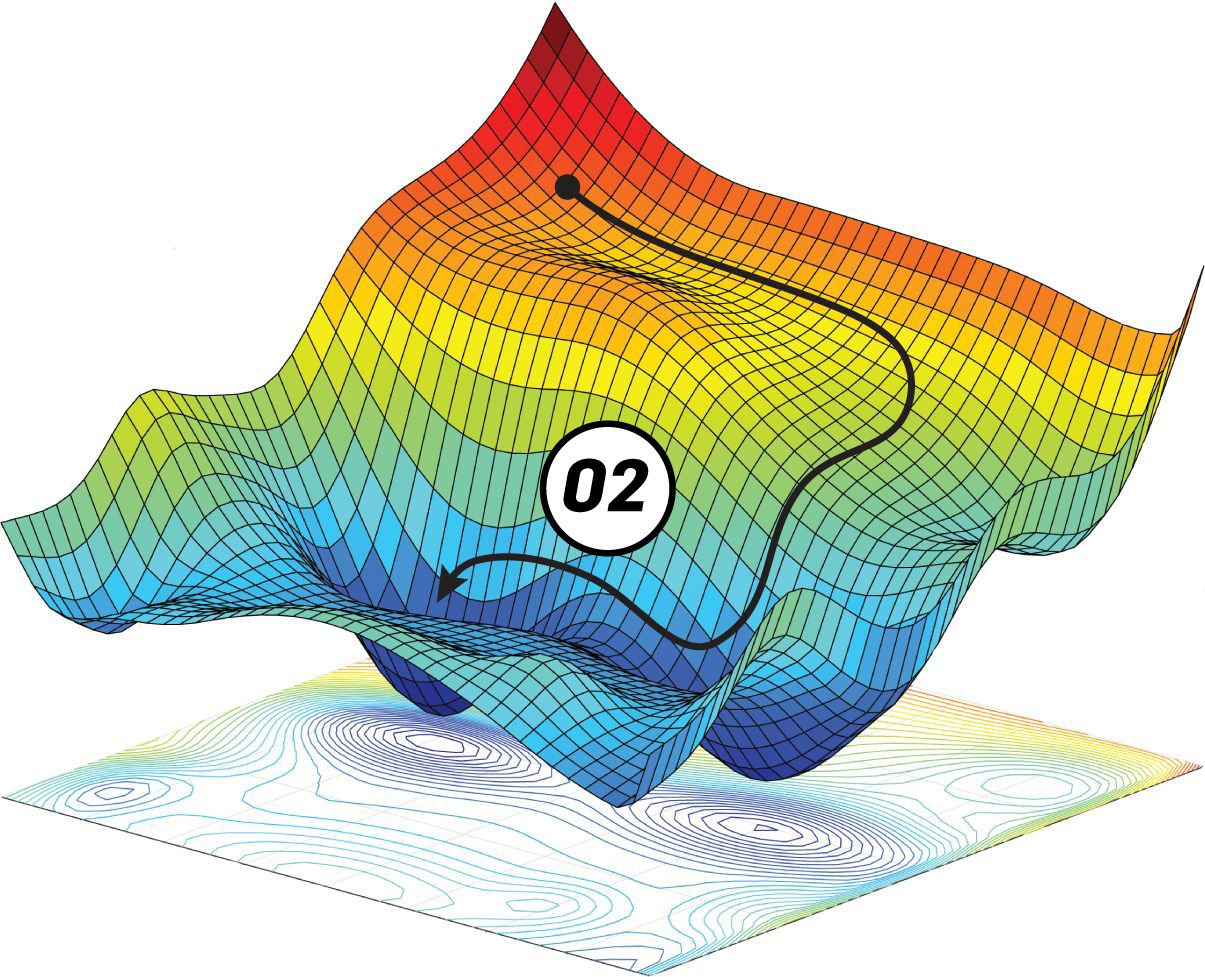

At every point there are two decisions to make: which way to go and how big a step to take. The Gradient Descent algorithm helps us make these decisions efficiently and effectively with the use of derivatives. A derivative is a term that comes from calculus and is calculated as the slope of the graph at a particular point. The slope is described by drawing a tangent line to the graph at that point. So, if we can compute this tangent line, we can compute the direction to move in to reach the minimum.

As discussed earlier, our aim is to approach the lowest point of the cost function. In the same figure, if we draw a tangent at the green point, we know that if we are moving upwards, we are moving away from the minimum, and vice versa.

There are three variants of the Gradient Descent algorithm:

- Batch Gradient Descent
- Mini-Batch Gradient Descent
- Stochastic Gradient Descent

We will talk about these in more detail in the latter part of the article.
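To make the idea of "which way to go and how big a step to take" concrete, here is a minimal sketch in plain Python. It assumes a toy problem that is not from the article: fitting a line `y = w*x + b` with a mean squared error cost. The function names, the toy data, the learning rate of `0.5`, and the number of epochs are all illustrative choices. The `batch_size` argument selects the variant: the whole data set gives batch gradient descent, a single example gives stochastic gradient descent, and anything in between gives mini-batch gradient descent.

```python
import random


def mse_gradient(w, b, batch):
    """Slope of the mean squared error of y ~ w*x + b over one batch."""
    n = len(batch)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def gradient_descent(data, batch_size, learning_rate=0.5, epochs=500, seed=0):
    """Fit w and b by repeatedly stepping against the slope of the cost."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    data = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad_w, grad_b = mse_gradient(w, b, batch)
            # Direction: a positive slope points uphill, so we subtract it.
            # Step size: the learning rate scales how far we move.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Hypothetical noise-free toy data lying exactly on y = 2x + 1.
data = [(i / 10, 2 * (i / 10) + 1) for i in range(10)]

w_batch, b_batch = gradient_descent(data, batch_size=len(data))  # batch
w_mini, b_mini = gradient_descent(data, batch_size=4)            # mini-batch
w_sgd, b_sgd = gradient_descent(data, batch_size=1)              # stochastic
```

On this noise-free data all three variants should end up close to `w = 2` and `b = 1`. On real, noisy data the stochastic and mini-batch variants typically keep fluctuating around the minimum unless the learning rate is reduced over time.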

Also, the squared differences increase the error distance, thus, making the bad predictions more pronounced than the good ones. lasagne's, caffe's, and keras' documentation). At the same time, every state-of-the-art Deep Learning library contains implementations of various algorithms to optimize gradient descent (e.g.

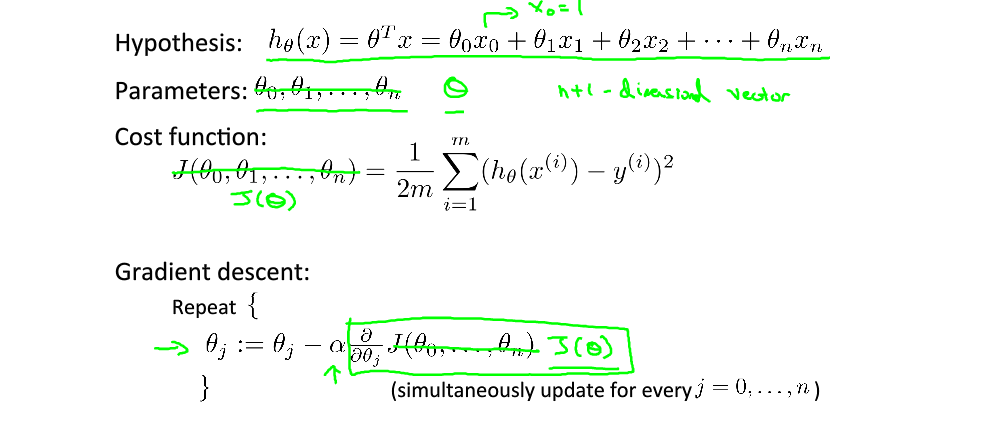

Indeed, to find that line we need to compute the first derivative of the Cost function, and it is much harder to compute the derivative of absolute values than squared values. Gradient descent is one of the most popular algorithms to perform optimization and by far the most common way to optimize neural networks. Why do we take the squared differences and simply not the absolute differences? Because the squared differences make it easier to derive a regression line.
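The difference between squared and absolute differences can be seen on a few numbers. The sketch below is only an illustration: the function names and the three sample residuals (prediction minus target) are made up for this example. It shows that the slope of the squared error is defined everywhere and grows with the error, while the slope of the absolute error is always plus or minus one and does not exist at zero.

```python
def squared_error(residual):
    return residual ** 2


def squared_error_slope(residual):
    # d/dr r^2 = 2r: defined for every r and proportional to the error.
    return 2 * residual


def absolute_error(residual):
    return abs(residual)


def absolute_error_slope(residual):
    # d/dr |r| is +1 or -1 away from zero; at r = 0 there is no single slope.
    if residual == 0:
        raise ValueError("the absolute value has no derivative at 0")
    return 1.0 if residual > 0 else -1.0


# Hypothetical residuals: two decent predictions and one bad one.
residuals = [0.5, 1.0, 4.0]

squared = [squared_error(r) for r in residuals]    # [0.25, 1.0, 16.0]
absolute = [absolute_error(r) for r in residuals]  # [0.5, 1.0, 4.0]
```

The bad prediction contributes 16 to the squared cost but only 4 to the absolute cost, which is what makes bad predictions stand out more under the squared error.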
